Optimism just announced the next chapter in its pursuit of an EVM-equivalent optimistic rollup. The announcement detailed plans for governance, a token, and retroactive public goods funding. The core goal of Ethereum rollups has always been to provide further scale to applications, resulting in cheaper fees for users. However, for rollups to reach their potential, they need to go further and implement additional optimizations that improve performance.

Parallel execution

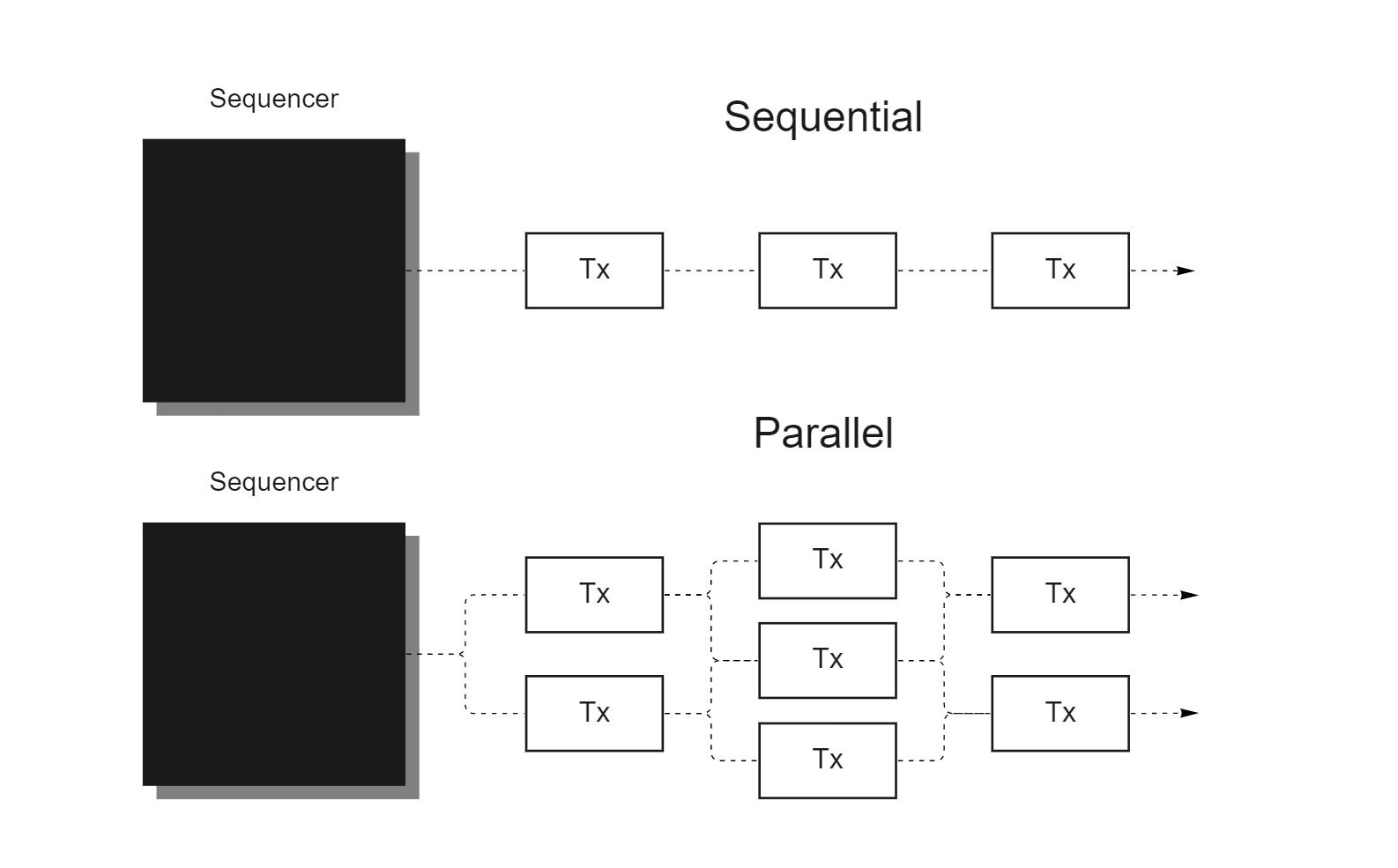

Processing transactions in parallel is one of the largest improvements rollups can make to increase scalability. Executing transactions sequentially can only take rollups so far. Since most rollups use the account model, parallel execution can be implemented through access lists. By requiring each transaction to declare a list of all parts of the state that it accesses, the rollup sequencer can execute transactions that access different parts of the state in parallel. Those that touch the same state are executed sequentially.
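As a rough illustration of the idea, the sketch below packs transactions into batches whose access lists don't overlap. The `Tx` type and `schedule` function are hypothetical names invented for this example, and it makes the simplifying assumption that every declared access is treated as a write (real designs may distinguish reads from writes).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    name: str
    access_list: frozenset  # state keys the transaction declares it will touch


def schedule(txs):
    """Pack transactions into batches with pairwise-disjoint access lists.

    Transactions inside one batch could run in parallel; batches run one
    after another. Assumption: any overlap in access lists is a conflict.
    """
    batches = []  # batches[i] is a list of Tx
    touched = []  # touched[i] is the union of access lists in batches[i]
    for tx in txs:
        # Place the tx right after the last batch it conflicts with, so
        # conflicting transactions keep their original relative order.
        target = 0
        for i, keys in enumerate(touched):
            if keys & tx.access_list:
                target = i + 1
        if target == len(batches):
            batches.append([])
            touched.append(set())
        batches[target].append(tx)
        touched[target] |= tx.access_list
    return batches


txs = [
    Tx("a", frozenset({"alice", "bob"})),
    Tx("b", frozenset({"carol", "dave"})),
    Tx("c", frozenset({"bob", "carol"})),  # conflicts with both a and b
    Tx("d", frozenset({"erin"})),
]
print([[tx.name for tx in batch] for batch in schedule(txs)])
# [['a', 'b', 'd'], ['c']]
```

Here `a`, `b`, and `d` touch disjoint state and can share a batch, while `c` overlaps with both `a` and `b` and has to wait for the next one.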

Scaling execution is vital because data throughput will soon stop being a bottleneck, thanks to purpose-built data availability layers like Celestia. With sufficient data throughput, the primary bottleneck capping a rollup’s throughput will be its execution capacity.

New execution environments

Rollups originally arose as a scaling solution for Ethereum. Because of this, many rollups strove to become highly compatible with the EVM, reducing the friction in onboarding applications. While EVM compatibility is beneficial for Ethereum developers, the EVM has many features that limit its performance. EVM-compatible rollups inherit those limitations, which lowers their theoretical throughput.

To alleviate the limitations of the EVM, rollups can implement new execution environments that are purpose-built to scale. Ultimately, more optimized execution environments are required for rollups to substantially surpass the throughput of monolithic chains.

Reduce state and history size

Rollups aren’t immune to state bloat. While they will initially have small state sizes, continual growth will affect performance and decentralization if ignored. Ethereum exemplifies this problem: its state size, currently 130GB, has become its largest bottleneck to increasing throughput. The large size raises hardware requirements, which affects decentralization, and increases the cost of performing state accesses.

Rollups can tackle state management primarily using two different methods: state expiry or statelessness. State expiry prunes old state past a predetermined point. Pruning can occur once or at set intervals (e.g. once per year). Nodes aren’t required to store state once it is pruned, reducing hardware requirements.
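A minimal sketch of interval-based expiry might look like the following. It assumes, purely for illustration, that each state entry records the epoch in which it was last touched; the `prune_expired` function and the `(value, last_touched_epoch)` layout are hypothetical and not taken from any particular rollup.

```python
def prune_expired(state, current_epoch, max_age):
    """Split state into entries a node keeps and entries it may drop.

    state maps key -> (value, last_touched_epoch). An entry expires when it
    has gone untouched for more than max_age epochs (an assumed rule).
    """
    live, expired = {}, {}
    for key, (value, last_touched) in state.items():
        if current_epoch - last_touched > max_age:
            expired[key] = (value, last_touched)
        else:
            live[key] = (value, last_touched)
    return live, expired


state = {"alice": (10, 9), "bob": (5, 3), "carol": (7, 8)}
live, expired = prune_expired(state, current_epoch=10, max_age=2)
print(sorted(live), sorted(expired))
# ['alice', 'carol'] ['bob']
```

Only `bob` has been idle for longer than two epochs, so only that entry leaves the set that nodes must store.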

State rent is another type of state expiry, where an account’s state expires once the account fails to pay rent to maintain its part of the state. Statelessness removes the responsibility for nodes to store the state, and comes in two flavours:

- Weak statelessness: Only block producers are required to store the state.

- Strong statelessness: No node is required to store the state.

However, statelessness is more technically complicated: nodes without the state require a witness to verify blocks, and witnesses can be large if the state tree doesn’t produce compact ones. Implementation is further complicated by the need to make sure those nodes can actually obtain the witnesses they need to verify each block.
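To make the role of a witness concrete, here is a small sketch in which a node holding only the state root checks one account against it. It assumes a plain binary Merkle tree over a power-of-two number of leaves and SHA-256, chosen only for brevity; production state trees use different structures, and all function names here are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves):
    """All levels of a binary Merkle tree (assumes len(leaves) is a power of two)."""
    level = [h(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def make_witness(levels, index):
    """Sibling hashes on the path from leaf `index` up to the root."""
    witness = []
    for level in levels[:-1]:
        witness.append(level[index ^ 1])  # the sibling sits at the paired index
        index //= 2
    return witness


def verify(root, leaf, index, witness):
    """Recompute the root from a leaf and its witness, without the full state."""
    node = h(leaf)
    for sibling in witness:
        node = h(node + sibling) if index % 2 == 0 else h(sibling + node)
        index //= 2
    return node == root


leaves = [b"alice:10", b"bob:5", b"carol:7", b"dave:1"]
levels = build_levels(leaves)
root = levels[-1][0]
witness = make_witness(levels, 2)

print(verify(root, b"carol:7", 2, witness))   # True
print(verify(root, b"carol:70", 2, witness))  # False
print(len(witness))                           # 2
```

In this toy tree the witness holds one hash per tree level, which is why the shape of the state tree determines how large witnesses get.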

History growth is directly linked to the amount of activity in the network, whereas state growth varies depending on how each transaction interacts with the state. The most effective way to deal with history is to implement some type of history expiry, where history past a certain point is pruned and nodes aren’t required to serve that data within the network. Both state and history expiry raise the issue of retrievability for expired data. However, rollups can be more aggressive with expiry than a monolithic chain, as their state can be reconstructed from the base layer. Ideally, there needs to be a protocol that serves as a backup to retrieve expired data, something similar to Arweave or Filecoin. Without a method to guarantee data retrievability at scale, there’s the possibility that expired state or history can become irretrievable.

Extra optimizations

Optimistic rollups

Fraud proofs play a key role in the security of optimistic rollups, and the fraud proof scheme itself can be optimized. Single-round fraud proofs are a simple construction: the block in question is re-executed and the resulting state root is compared to the state root that was submitted. Any difference between the two state roots proves that the sequencer committed an invalid block, and the sequencer can be punished accordingly. However, the limitation of single-round fraud proofs is that the rollup block can’t be larger than the block size of the base layer. Alternatively, rollups can have larger blocks if they use interactive verification games (IVGs). The prover and verifier play a bisection game in which they narrow the block down to the specific transactions in dispute, which are then sent on-chain for re-execution, resulting in smaller fraud proofs.
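The bisection step can be sketched as a binary search. The example below assumes, as a simplification, that each party can produce a state commitment after every transaction in the block and that both agree on the state before the block; `find_disputed_tx` is a hypothetical helper, not the protocol of any specific rollup.

```python
def find_disputed_tx(claimed, honest):
    """Narrow a dispute down to a single transaction by bisection.

    claimed[i] and honest[i] are each party's state commitment after
    transaction i. Returns (index, rounds), where the parties agree on the
    state before transaction `index` but disagree on the state after it.
    Assumes a shared pre-state and a disputed final state.
    """
    assert len(claimed) == len(honest)
    assert claimed[-1] != honest[-1], "nothing to dispute"
    lo, hi = 0, len(claimed) - 1  # agreement just before lo, disagreement at hi
    rounds = 0
    while lo < hi:
        mid = (lo + hi) // 2
        if claimed[mid] == honest[mid]:
            lo = mid + 1  # the divergence happens later
        else:
            hi = mid      # the divergence is at mid or earlier
        rounds += 1
    return lo, rounds


honest = [f"root{i}" for i in range(8)]
claimed = honest[:5] + ["bad5", "bad6", "bad7"]  # sequencer diverges at tx 5
print(find_disputed_tx(claimed, honest))
# (5, 3)
```

Three rounds are enough to isolate one transaction out of eight, so only that transaction needs to be re-executed on the base layer rather than the whole block.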

Zk-rollups

Proof generation is expensive. To reduce the cost of proving, a set of provers can run in parallel. For example, instead of generating a single proof per block, 50 provers can each generate, in parallel, a proof for 1/50th of the block. The proofs are then recursively aggregated until a single proof is left. Each prover only bears approximately 1/50th of the cost, rather than a single prover bearing the entire cost. While there’s still difficulty around implementing parallel proof generation, zk-rollups need to decrease proving costs to achieve sufficient decentralization and censorship resistance.
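The shape of this pipeline can be sketched as follows. The "proofs" here are just SHA-256 hashes standing in for real proofs, and `prove_chunk`, `aggregate`, and `prove_block` are hypothetical names; the sketch only illustrates splitting the work and pairwise aggregation, not any actual proving system.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def prove_chunk(chunk):
    """Stand-in for an expensive prover: a hash, NOT a real validity proof."""
    return hashlib.sha256("".join(chunk).encode()).hexdigest()


def aggregate(left, right):
    """Stand-in for recursively aggregating two proofs into one."""
    return hashlib.sha256((left + right).encode()).hexdigest()


def prove_block(txs, n_provers):
    """Prove chunks in parallel, then aggregate pairwise (assumes txs is non-empty)."""
    size = -(-len(txs) // n_provers)  # ceiling division
    chunks = [txs[i:i + size] for i in range(0, len(txs), size)]
    with ThreadPoolExecutor(max_workers=n_provers) as pool:
        proofs = list(pool.map(prove_chunk, chunks))

    rounds = 0
    while len(proofs) > 1:
        paired = [aggregate(proofs[i], proofs[i + 1])
                  for i in range(0, len(proofs) - 1, 2)]
        if len(proofs) % 2:
            paired.append(proofs[-1])  # an unpaired proof carries over
        proofs = paired
        rounds += 1
    return proofs[0], rounds


txs = [f"tx{i}" for i in range(500)]
proof, rounds = prove_block(txs, n_provers=50)
print(rounds)
# 6
```

With 50 chunk proofs, pairwise aggregation takes six rounds (50, 25, 13, 7, 4, 2, 1), so the aggregation depth grows logarithmically with the number of provers.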